Difference between revisions of "How to install Percona XtraDB Cluster"

(→Test 1) |

(→Test 1) |

||

| Line 510: | Line 510: | ||

[[File:Haproxy stats3.JPG|400|]] | [[File:Haproxy stats3.JPG|400|]] | ||

| − | + | ||

| − | + | | 101358 | sbtest | web2004.dfw.ppnet:35369 | sbtest | Sleep | 0 | | NULL | 0 | | |

| − | + | 0 | | |

| − | | | + | | 101359 | sbtest | web2004.dfw.ppnet:35375 | sbtest | Sleep | 0 | | NULL | 1 | |

| − | + | 1 | | |

| − | | | + | +--------+-------------+-------------------------+--------+---------+-------+--------------------------------------+------------------+-----------+- |

| − | + | --------------+ | |

| − | + | 12 rows in set (0.00 sec) | |

| − | |||

| − | +-------+-------------+-------------------------+--------+---------+-------+------------------------------------------------- | ||

| − | |||

| − | |||

| − | |||

===Test 2=== | ===Test 2=== | ||

===Test 3=== | ===Test 3=== | ||

===Test 4=== | ===Test 4=== | ||

Revision as of 20:22, 21 February 2018

For this tutorial, we will be installing:

- Percona-XetraDB-cluster 5.7

- Haproxy version 1.6.3

- sysbench version 1.0.12

Prerequisites

To complete this tutorial, you'll need the following:

- Four(4) Debian 9(Stretch) servers

This lab has been tested also on Ubuntu 14.04 and Xenial 16.04.

| Servername | IP adress | Node Role |

| db1 | 10.192.16.58 | First db server |

| db2 | 10.192.16.59 | Second db server |

| db3 | 10.192.16.60 | Third db server |

| web1 | 10.192.16.61 | Test client |

STEP 1- Install Debian 9 on all 4 servers

Make sure that you install Debian 9 on all servers and all servers are up to date by runnimg the commands below after the install.

sudo apt-get update

sudo apt-get -y upgrade

Note: If you are not running a DNS server in your environment, make sure you update you /etc/hosts file on each node.

127.0.0.1 localhost 10.192.16.58 db1 10.192.16.59 db2 10.192.16.60 db3 10.192.16.61 web1 # The following lines are desirable for IPv6 capable hosts ::1 localhost ip6-localhost ip6-loopback ff02::1 ip6-allnodes ff02::2 ip6-allrouters

STEP 2- Install Percona_XtraDB-Cluster on db1,db2 and db3

- Login as root

- Use "vim" or "nano" to create a new file "percona.sh".

- Copy the script below and paste it into the new file.

Percona install script:

#!/bin/bash #update all nodes sudo apt-get update sudo apt-get -y upgrade #Install Percon-Xtradb-cluster sudo apt-get -y install wget cd /tmp/ wget https://repo.percona.com/apt/percona-release_0.1-5.$(lsb_release -sc)_all.deb sudo dpkg -i percona-release_0.1-5.$(lsb_release -sc)_all.deb sudo apt-get update sudo apt-get -y install percona-xtradb-cluster-57 #Stop mysqld on all nodes sudo systemctl stop mysql

- Save the file and close it

- Make the file executable

chmod +x percona.sh

- Run the script

./percona.sh

During the install, you will be asked to enter the "mysql root password". Enter a password

Use the same password for all 3 nodes

- After the install complete, check mysql is not running on all node.The script should stop mysql, but it is always good to check.

systemctl status mysql Active: inactive (dead)

if mysql is running stop it with:

systemctl stop mysql

STEP 3- Configue Percona_XtraDB-Cluster

Now is time to configure the servers.It is best to start on the first node (db1) open the file /etc/mysql/my.cnf The file will look like this

# # The Percona XtraDB Cluster 5.7 configuration file. # # # * IMPORTANT: Additional settings that can override those from this file! # The files must end with '.cnf', otherwise they'll be ignored. # Please make any edits and changes to the appropriate sectional files # included below. # !includedir /etc/mysql/conf.d/ !includedir /etc/mysql/percona-xtradb-cluster.conf.d/

At the end of the file add the following lines

[mysqld] server_id=1 datadir=/var/lib/mysql user=mysql # Path to Galera library wsrep_provider=/usr/lib/libgalera_smm.so # Cluster connection URL contains the IPs of node#1, node#2 and node#3 wsrep_cluster_address=gcomm://10.192.16.58,10.192.16.59,10.192.16.60 # In order for Galera to work correctly binlog format should be ROW binlog_format=ROW # MyISAM storage engine has only experimental support default_storage_engine=InnoDB # This InnoDB autoincrement locking mode is a requirement for Galera innodb_autoinc_lock_mode=2 # Node address and name wsrep_node_address=10.192.16.58 wsrep_node_name=db1 # SST method wsrep_sst_method=xtrabackup-v2 # Cluster name wsrep_cluster_name=ppnet_cluster # Authentication for SST method wsrep_sst_auth="sstuser:password"

Once done save the file

db1 configuration file

# # The Percona XtraDB Cluster 5.7 configuration file. # # # * IMPORTANT: Additional settings that can override those from this file! # The files must end with '.cnf', otherwise they'll be ignored. # Please make any edits and changes to the appropriate sectional files # included below. # !includedir /etc/mysql/conf.d/ !includedir /etc/mysql/percona-xtradb-cluster.conf.d/ [mysqld] server_id=1 datadir=/var/lib/mysql user=mysql # Path to Galera library wsrep_provider=/usr/lib/libgalera_smm.so # Cluster connection URL contains the IPs of node#1, node#2 and node#3 wsrep_cluster_address=gcomm://10.192.16.58,10.192.16.59,10.192.16.60 # In order for Galera to work correctly binlog format should be ROW binlog_format=ROW # MyISAM storage engine has only experimental support default_storage_engine=InnoDB # This InnoDB autoincrement locking mode is a requirement for Galera innodb_autoinc_lock_mode=2 # Node address and name wsrep_node_address=10.192.16.58 wsrep_node_name=db1 # SST method wsrep_sst_method=xtrabackup-v2 # Cluster name wsrep_cluster_name=ppnet_cluster # Authentication for SST method wsrep_sst_auth="sstuser:password"

db2 configuration file

# # The Percona XtraDB Cluster 5.7 configuration file. # # # * IMPORTANT: Additional settings that can override those from this file! # The files must end with '.cnf', otherwise they'll be ignored. # Please make any edits and changes to the appropriate sectional files # included below. # !includedir /etc/mysql/conf.d/ !includedir /etc/mysql/percona-xtradb-cluster.conf.d/ [mysqld] server_id=2 datadir=/var/lib/mysql user=mysql # Path to Galera library wsrep_provider=/usr/lib/libgalera_smm.so # Cluster connection URL contains the IPs of node#1, node#2 and node#3 wsrep_cluster_address=gcomm://10.192.16.58,10.192.16.59,10.192.16.60 # In order for Galera to work correctly binlog format should be ROW binlog_format=ROW # MyISAM storage engine has only experimental support default_storage_engine=InnoDB # This InnoDB autoincrement locking mode is a requirement for Galera innodb_autoinc_lock_mode=2 # Node address and name wsrep_node_address=10.192.16.59 wsrep_node_name=db2 # SST method wsrep_sst_method=xtrabackup-v2 # Cluster name wsrep_cluster_name=ppnet_cluster # Authentication for SST method wsrep_sst_auth="sstuser:password"

db3 configuration file

# # The Percona XtraDB Cluster 5.7 configuration file. # # # * IMPORTANT: Additional settings that can override those from this file! # The files must end with '.cnf', otherwise they'll be ignored. # Please make any edits and changes to the appropriate sectional files # included below. # !includedir /etc/mysql/conf.d/ !includedir /etc/mysql/percona-xtradb-cluster.conf.d/ [mysqld] server_id=3 datadir=/var/lib/mysql user=mysql # Path to Galera library wsrep_provider=/usr/lib/libgalera_smm.so # Cluster connection URL contains the IPs of node#1, node#2 and node#3 wsrep_cluster_address=gcomm://10.192.16.58,10.192.16.59,10.192.16.60 # In order for Galera to work correctly binlog format should be ROW binlog_format=ROW # MyISAM storage engine has only experimental support default_storage_engine=InnoDB # This InnoDB autoincrement locking mode is a requirement for Galera innodb_autoinc_lock_mode=2 # Node address and name wsrep_node_address=10.192.16.60 wsrep_node_name=db3 # SST method wsrep_sst_method=xtrabackup-v2 # Cluster name wsrep_cluster_name=ppnet_cluster # Authentication for SST method wsrep_sst_auth="sstuser:password"

After editing /etc/mysql/my.cnf, it is time to bootstrap the first node.

Note: For the first node, it is also possible to not pass any values to wsrep_cluster_address=gcomm and bootstrap the node

wsrep_cluster_address=gcomm://

If you decide to use this option, make sure that for node 2 and node3 the value for wsrep_cluster_address=gcomm is the IP address of the first node

wsrep_cluster_address=gcomm://10.192.16.58

STEP 4- Bootstrap the first node (db1)

Use the command below to bootstrap the first node

/etc/init.d/mysql bootstrap-pxc

if everything goes well you will get the message below

[ ok ] Bootstrapping Percona XtraDB Cluster database server: mysqld ..

- Login to mysql

mysql -p

- Enter the passwword used during installation

- Type

show status like "wsrep_cluster_size";

You should see 1 for the cluster size value

+--------------------+-------+ | Variable_name | Value | +--------------------+-------+ | wsrep_cluster_size | 1 | +--------------------+-------+ 1 row in set (0.00 sec)

So far everything is going well. Before we start the second and third node, we need to create the sst user we setup in the my.cnf file

wsrep_sst_auth="sstuser:password"

- Login again to mysql

- run the commands below to creast the sst user

CREATE USER 'sstuser'@'localhost' IDENTIFIED BY 'password'; GRANT PROCESS, RELOAD, LOCK TABLES, REPLICATION CLIENT ON *.* TO 'sstuser'@'localhost'; FLUSH PRIVILEGES;

Note: In a production environment, make sure to note use "password" for password

STEP 5- Start mysql on the second and third node

For the second and third node it is very simple. Just run the command before

systemctl start mysql

If you get no errors, that means we have added the node to the cluster.

To check, run: show status like "wsrep_cluster_size"; on any node. The cluster size value should be now 3

mysql> show status like "wsrep_cluster_size"; +--------------------+-------+ | Variable_name | Value | +--------------------+-------+ | wsrep_cluster_size | 3 | +--------------------+-------+ 1 row in set (0.00 sec)

The cluster setup is now complete, but before we move to the next set-up, we need to prepare the cluster to work with haproxy.

Since MYSQL is checked via httpchk, there is an utility that comes with Percona XtraDB Cluster called "clustercheck" that enbale haproxy

to check MYSQL via http. For now "clustercheck" is not set up yet. We can verify that by running the command on any node in the cluster.

login to any node and as root run clustercheck. The output will be:

HTTP/1.1 503 Service Unavailable Content-Type: text/plain Connection: close Content-Length: 57

Setup clustercheck

Setting up clustercheck is very simple

- setup 1

- Create the clustercheck user

GRANT PROCESS ON *.* TO 'clustercheckuser'@'localhost' IDENTIFIED BY 'clustercheckpassword!';

Now if we run clustercheck on any node the output will be

HTTP/1.1 200 OK Content-Type: text/plain Connection: close Content-Length: 40

on all the nodes, install xinetd if it is not installed

- setup 2

- install xinetd

sudo apt-get install xinetd

Restart the service

sudo systemctl restart xinetd

Note: you can add those two lines at the end of the persona.sh script so while installing the Percona Xtradb cluster you install also xinetd. the script will look like this:

#!/bin/bash #update all nodes sudo apt-get update sudo apt-get -y upgrade #Install Percon-Xtradb-cluster sudo apt-get -y install wget cd /tmp/ wget https://repo.percona.com/apt/percona-release_0.1-5.$(lsb_release -sc)_all.deb sudo dpkg -i percona-release_0.1-5.$(lsb_release -sc)_all.deb sudo apt-get update sudo apt-get -y install percona-xtradb-cluster-57 #Stop mysqld on all nodes sudo systemctl stop mysql sudo apt-get -y install xinetd sudo systemctl restart xinetd

Now our cluster is ready, it is time to set up the "test client"

STEP 6- Install the test client node (web1)

Install Haproxy

Run the command below to instal Haproxy

sudo apt-get -y install haproxy

Configure Haproxy

Open the configuration file /etc/haproxy/haproxy.cfg it will look like the file below

global

log /dev/log local0

log /dev/log local1 notice

chroot /var/lib/haproxy

stats socket /run/haproxy/admin.sock mode 660 level admin

stats timeout 30s

user haproxy

group haproxy

daemon

# Default SSL material locations

ca-base /etc/ssl/certs

crt-base /etc/ssl/private

# Default ciphers to use on SSL-enabled listening sockets.

# For more information, see ciphers(1SSL). This list is from:

# https://hynek.me/articles/hardening-your-web-servers-ssl-ciphers/

ssl-default-bind-ciphers ECDH+AESGCM:DH+AESGCM:ECDH+AES256:DH+AES256:ECDH+AES128:DH+AES:ECDH+3DES:DH+3DES:RSA+AESGCM:RSA+AES:RSA+3DES:!aNULL:!MD5:!DSS

ssl-default-bind-options no-sslv3

Add this to the file.

#First part

listen stats

#Listen on port 9000

bind :9000

#This is the virtual URL to access the stats page

stats uri /haproxy_stats

stats enable

#login: username and password

stats auth yourusernamehere:yourpasswordhere

#This allows you to take down and bring up back end servers

stats admin if TRUE

#Second part

frontend pxc-front

bind *:3307

mode tcp

default_backend pxc-back

backend pxc-back

mode tcp

balance roundrobin

option httpchk

server db1 10.192.16.58:3306 check port 9200 inter 400 rise 3 fall 3

server db2 10.192.16.59:3306 check port 9200 inter 400 rise 3 fall 3

server db3 10.192.16.60:3306 check port 9200 inter 400 rise 3 fall 3

#Third part

frontend pxc-onenode-front

bind *:3306

mode tcp

default_backend pxc-onenode-back

backend pxc-onenode-back

mode tcp

balance roundrobin

option httpchk

server db1 10.192.16.58:3306 check port 9200 inter 400 rise 3 fall 3

server db2 10.192.16.59:3306 check port 9200 inter 400 rise 3 fall 3 backup

server db3 10.192.16.60:3306 check port 9200 inter 400 rise 3 fall 3 backup

- Save and close the file

- restart the service

sudo systemctl restart haproxy

- Configuration explanation

The configuration file has 3 parts

This first part is about showing the stats on the page

The second part will balance the load to all three nodes, if the application uses port 3307

The third part will set db1 as active node, db2 and db3 as backup nodes. This means, when the application uses port 3306 it will write only to db1

If db1 failed or no longer available, db2 will take over. db1 doesn't have to be the active node it can be any node like db2.I just pick db1 for this tutorial

server db1 10.192.16.58:3306 check port 9200 inter 400 rise 3 fall 3 backup server db2 10.192.16.59:3306 check port 9200 inter 400 rise 3 fall 3 server db3 10.192.16.60:3306 check port 9200 inter 400 rise 3 fall 3 backup

Note:

In the 3 nodes configuration, only 1 node in the cluster is allow to fail.If one node failed we can stay read and write to only cluster.

If we want to have 2 nodes to failed and stay be able to read and write to our cluster, we need to have a 5 nodes cluster.

- Open a browser and type

10.192.16.61:9000/haproxy_stats

- Enter your username and password

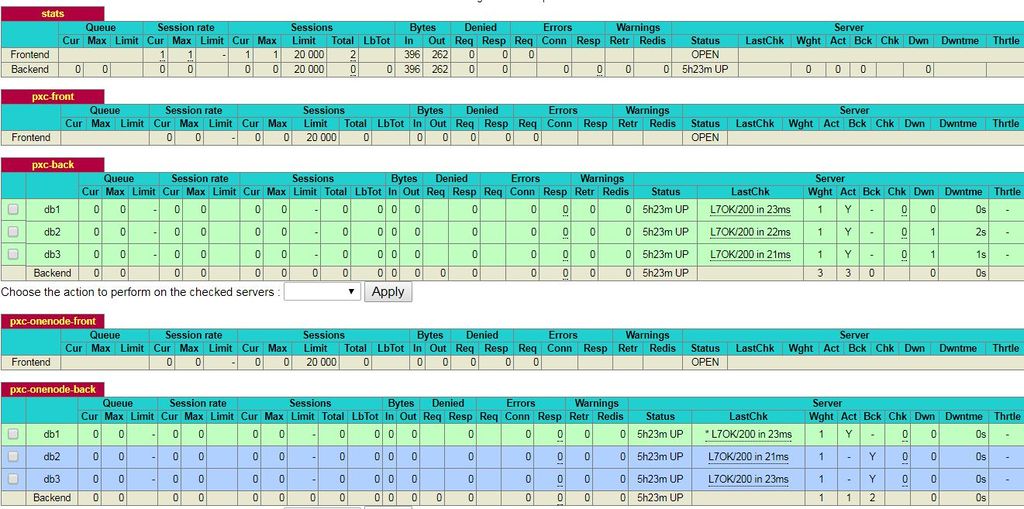

This will open up the haproxy GUI. It should look like the image below.

Testing

Since we will be using sysbench to run our test on the cluster, we need to create the sbtest user and the database. You can call the user whatever you want.

Install sysbench

Run the command below to install sysbench (This needs to be done only on the test node)

curl -s https://packagecloud.io/install/repositories/akopytov/sysbench/script.deb.sh | sudo bash sudo apt -y install sysbench

If curl is not installed, run

sudo apt-get -y install curl

Create the sbtest user

Login to any node in the cluster and run the command below to create the sbtest user and the database. (you can change the password if you want)

create database sbtest; grant all on sbtest.* to 'sbtest'@'%' identified by 'sbpass'; flush privileges;

Add some data to the table:

Use the command below

sysbench --test=/usr/share/sysbench/tests/include/oltp_legacy/oltp.lua --db-driver=mysql --mysql-engine-trx=yes --mysql-table-engine=innodb \ --mysql-host=127.0.0.1 --mysql-port=3307 --mysql-user=sbtest --mysql-password=sbpass --oltp-table-size=10000 prepare

Run the test

We are going to run four(4) tests

- A test on port 3307: which will balance the load on all 3 nodes

- A test on port 3306: which will write only to db1

- A test on port 3307: then kill mysql on db1

- A test on port 3306: then kill mysql on db1

For all four(4) test with will need two(2) scripts

one script for port 3307 and another script for port 3306

We are going to call the scripts: 3307.sh and 3306.sh. As root login to the test node and create 2 files 3306.sh and 3307.sh

See below for both scripts

Script for port 3307

#!/bin/bash

while true ; do sysbench --test=/usr/share/sysbench/tests/include/oltp_legacy/oltp.lua \

--db-driver=mysql \

--mysql-engine-trx=yes \

--mysql-table-engine=innodb \

--mysql-host=127.0.0.1 \

--mysql-port=3307 \

--mysql-user=sbtest \

--mysql-password=sbpass \

--oltp-table-size=10000 \

--report-interval=1 \

--num-threads=8 \

--reqests=0 \

--time=0 \

run ; sleep 1; done

Script for port 3306

#!/bin/bash

while true ; do sysbench --test=/usr/share/sysbench/tests/include/oltp_legacy/oltp.lua \

--db-driver=mysql \

--mysql-engine-trx=yes \

--mysql-table-engine=innodb \

--mysql-host=127.0.0.1 \

--mysql-port=3306 \

--mysql-user=sbtest \

--mysql-password=sbpass \

--oltp-table-size=10000 \

--report-interval=1 \

--num-threads=8 \

--reqests=0 \

--time=0 \

run ; sleep 1; done

Make both scripts executable

chmod +x 3306.sh chmod +x 3307.sh

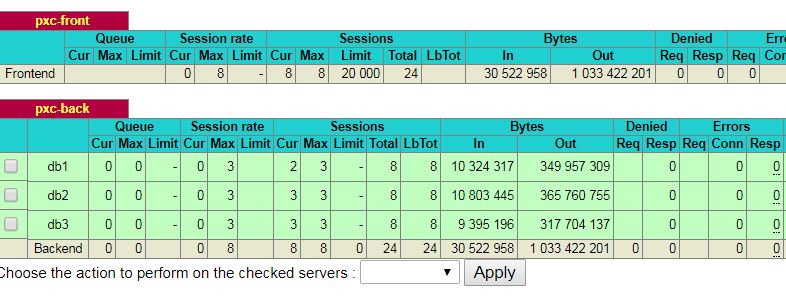

Test 1

For all test, we will be running a total of 8 threads. If we run the first test, we should see the haproxy balancing the load

to all 3 nodes. Since we have 8 threads, a possible scenario will be one node will have 2 connections and the other nodes will have each

3 connections. That makes it a total of 8 connections which equals to our 8 threads from the test .

Here is the out put for test one:

| 101358 | sbtest | web2004.dfw.ppnet:35369 | sbtest | Sleep | 0 | | NULL | 0 | 0 | | 101359 | sbtest | web2004.dfw.ppnet:35375 | sbtest | Sleep | 0 | | NULL | 1 | 1 | +--------+-------------+-------------------------+--------+---------+-------+--------------------------------------+------------------+-----------+- --------------+ 12 rows in set (0.00 sec)