Difference between revisions of "How to install MYSQL NDB cluster"

| (62 intermediate revisions by the same user not shown) | |||

| Line 7: | Line 7: | ||

* mysql-cluster-gpl-7.6.7-linux-glibc2.12-x86_64 | * mysql-cluster-gpl-7.6.7-linux-glibc2.12-x86_64 | ||

| − | Naming | + | ===Naming === |

db2004.dfw.ppnet = 1st SQL node | db2004.dfw.ppnet = 1st SQL node | ||

| Line 19: | Line 19: | ||

db2008.dfw.ppnet= management node | db2008.dfw.ppnet= management node | ||

| − | Diagram | + | ===Diagram === |

[[file:Ndb_cluster.png]] | [[file:Ndb_cluster.png]] | ||

| Line 97: | Line 97: | ||

cd mysql-cluster-gpl-7.6.7-linux-glibc2.12-x86_64 | cd mysql-cluster-gpl-7.6.7-linux-glibc2.12-x86_64 | ||

cd bin | cd bin | ||

| − | sudo cp ndb_mgm /usr/bin/ | + | sudo cp ndb_mgm /usr/local/bin/ |

| − | sudo cp ndb_mgmd /usr/bin/ | + | sudo cp ndb_mgmd /usr/local/bin/ |

cd /usr/bin/ | cd /usr/bin/ | ||

sudo chmod 755 ndb_mg* | sudo chmod 755 ndb_mg* | ||

| Line 128: | Line 128: | ||

fi | fi | ||

#Start the manegement node | #Start the manegement node | ||

| − | echo"starting the manegement node" | + | echo "starting the manegement node" |

sleep 3 | sleep 3 | ||

cd | cd | ||

| Line 145: | Line 145: | ||

Set back and relax | Set back and relax | ||

| − | + | After the script complete type command | |

ndb_mgm | ndb_mgm | ||

| Line 168: | Line 168: | ||

id=5 (not connected, accepting connect from db2009.dfw.ppnet) | id=5 (not connected, accepting connect from db2009.dfw.ppnet) | ||

We can see that the management node is up and running and the data nodes and SQL nodes are stay down. In the next session we are going to setup the data nodes | We can see that the management node is up and running and the data nodes and SQL nodes are stay down. In the next session we are going to setup the data nodes | ||

| + | Note: If you are using UFW make sure that port 1186 is open on the mgmt node. | ||

==Data node== | ==Data node== | ||

| Line 174: | Line 175: | ||

#!/bin/bash | #!/bin/bash | ||

| + | file="my.cnf" | ||

#create a folder called files under /tmp/ | #create a folder called files under /tmp/ | ||

echo " Creating folder" | echo " Creating folder" | ||

| Line 209: | Line 211: | ||

#start the service | #start the service | ||

cd | cd | ||

| − | ndbd | + | sudo ndbd |

save the file and close it. Make the file executable | save the file and close it. Make the file executable | ||

sudo chmod +x data._node.sh | sudo chmod +x data._node.sh | ||

| Line 498: | Line 500: | ||

==How to add a new SQL or data node== | ==How to add a new SQL or data node== | ||

*Step 1 | *Step 1 | ||

| − | Before you add a new SQL or data node, make sure if you | + | Before you add a new SQL or data node, make sure if you are using an IP that the management node can ping the new node and the new node can ping also the management node. If you are using the FQDN or hostanme, make sure that name can be resolved by both the management node and the new node. |

*step 2 | *step 2 | ||

Login to your management node and stop the management node with the command "1 stop" 1 being the node ID | Login to your management node and stop the management node with the command "1 stop" 1 being the node ID | ||

| Line 562: | Line 564: | ||

2 data nodes you can shut down only 1 node | 2 data nodes you can shut down only 1 node | ||

3 data nodes you can shut down 2 nodes | 3 data nodes you can shut down 2 nodes | ||

| + | |||

| + | ==Scenario 1== | ||

| + | Now that we have our cluster fully setup, we are going to discuss about what happen at the application level. | ||

| + | |||

| + | [[File:Ndb1_cluster1.png]] | ||

| + | |||

| + | if we do have for example 2 application nodes (web1 and web2) running a simple application to create a new user, we will have to setup web1 to connect to SQL node 1 and web2 to connect to SQL node 2. In this scenario, if one of the SQL node is not available, the application connecting to that particular SQL node will fail. | ||

| + | |||

| + | ==Scenario 2== | ||

| + | |||

| + | [[File:Ndb_cluster2.png]] | ||

| + | |||

| + | To address the issue in scenario 1, we will have to add a haproxy node between the application and the SQL nodes. The application will connect to the haproxy node and the haproxy will balance the load to each SQL node. in case one of the SQL node is not available, the haproxy node knows how to connect all the application nodes to the available SQL node. The problem here is that, if the haproxy node is not availlable, the application nodes will fail. | ||

| + | |||

| + | ==Scenario 3== | ||

| + | [[File:Ndb_cluster3.png]] | ||

| + | |||

| + | To address the issue in scenario 2, we will add another haproxy node.The first haproxy will be in active mode and the second one in passive mode. We will setup heartbeat on both haproxy nodes and setup a virtual IP between both node. IF the active node goes down automatically, the passive node take over and become the active node and if the active node comes back up, it takes over the active node role and the passive node goes back to the passive mode role. | ||

=Setting up haproxy= | =Setting up haproxy= | ||

| + | ==Setting haproxy user on SQL nodes== | ||

| + | Login to your SQL nodes and run the commands below | ||

| + | |||

| + | create user 'haproxy_check_user'@'haproxy2001.dfw.ppnet'; | ||

| + | grant all privileges on *.* to 'root'@'haproxy2001.dfw.ppnet' identified by 'your_password' with grant option; | ||

| + | create user 'haproxy_check_user'@'haproxy2002.dfw.ppnet'; | ||

| + | grant all privileges on *.* to 'root'@'haproxy2002.dfw.ppnet' identified by 'your_password' with grant option; | ||

| + | |||

| + | ==Installing haproxy== | ||

| + | Login to both your haproxy servers and run the command | ||

| + | sudo apt-get install haproxy | ||

| + | |||

| + | ==Configuring haproxy== | ||

| + | Navigate to | ||

| + | /etc/haproxy | ||

| + | and open the haproxy configuration file "haproxy.cfg" | ||

| + | vi haproxy.cfg | ||

| + | Copy and paste the code below at the end of the file. This needs to be done on both haproxy servers | ||

| + | |||

| + | # HAProxy's stats | ||

| + | listen stats | ||

| + | bind 10.192.0.224:8880 | ||

| + | stats enable | ||

| + | stats hide-version | ||

| + | stats uri / | ||

| + | stats realm HAProxy Statistics | ||

| + | stats auth user_name:user_name_password | ||

| + | listen webfarm | ||

| + | bind 10.192.0.224:3306 | ||

| + | mode tcp | ||

| + | balance roundrobin | ||

| + | option mysql-check user haproxy_check | ||

| + | server SQL1 db2004.dfw.ppnet:3306 check fall 2 inter 1000 | ||

| + | server SQL2 db2009.dfw.ppnet:3306 check fall 2 inter 1000 | ||

| + | |||

| + | * stats auth = The username and password we will use to login to the haproxy WEB GUI | ||

| + | * 10.192.0.224 = The virtual IP address we will use for heartbeat in the next section | ||

| + | |||

| + | ==Installing heartbeat== | ||

| + | Login to both your haproxy servers and run the command | ||

| + | sudo apt-get install heartbeat | ||

| + | |||

| + | For the heartbeat there are a couple of files we need to work on. This needs to be done also on both haproxy servers | ||

| + | |||

| + | ==configuring heartbeat== | ||

| + | * On haproxy2001 | ||

| + | Navigate to /etc/heartbeat and open authkeys; use the link below to generate a md5 hash and past it into the file | ||

| + | https://www.md5hashgenerator.com/ | ||

| + | - authkeys | ||

| + | auth 3 | ||

| + | 3 md5 75cbfa4f4f9f0606171f7bc3c61905c7 - | ||

| + | Save and close the file. | ||

| + | |||

| + | The next file to open is "ha.cf" in the ucast session enter you NIC name and the ip address and at the bottom of the file put the name of both haproxy servers | ||

| + | - ha.cf | ||

| + | |||

| + | # | ||

| + | # keepalive: how many seconds between heartbeats | ||

| + | # | ||

| + | keepalive 2 | ||

| + | # | ||

| + | # deadtime: seconds-to-declare-host-dead | ||

| + | # | ||

| + | deadtime 10 | ||

| + | # | ||

| + | # What UDP port to use for udp or ppp-udp communication? | ||

| + | # | ||

| + | udpport 694 | ||

| + | bcast ens34 | ||

| + | mcast ens34 225.0.0.1 694 1 0 | ||

| + | <span style="color: red">ucast ens34 10.192.0.222 </span> | ||

| + | # What interfaces to heartbeat over? | ||

| + | #udp eth0 | ||

| + | # | ||

| + | # Facility to use for syslog()/logger (alternative to log/debugfile) | ||

| + | # | ||

| + | logfacility local0 | ||

| + | # | ||

| + | # Tell what machines are in the cluster | ||

| + | # node nodename ... -- must match uname -n | ||

| + | <span style="color: red">node haproxy2001</span> | ||

| + | <span style="color: red">node haproxy2002</span> | ||

| + | The last file to edit is "haresources. Here you have the option to use the haproxy2001 or the haproxy2002 name it really doesn't matter. But it has to be the same on both haproxy's, and 10.192.0.224 in my case is the Virtual IP, replace this with your IP. | ||

| + | |||

| + | - haresources | ||

| + | haproxy2001 10.192.0.224 | ||

| + | |||

| + | * On haproxy2002 | ||

| + | - authkeys | ||

| + | auth 3 | ||

| + | 3 md5 75cbfa4f4f9f0606171f7bc3c61905c7 - | ||

| + | |||

| + | - ha.cf | ||

| + | |||

| + | # | ||

| + | # keepalive: how many seconds between heartbeats | ||

| + | # | ||

| + | keepalive 2 | ||

| + | # | ||

| + | # deadtime: seconds-to-declare-host-dead | ||

| + | # | ||

| + | deadtime 10 | ||

| + | # | ||

| + | # What UDP port to use for udp or ppp-udp communication? | ||

| + | # | ||

| + | udpport 694 | ||

| + | bcast ens34 | ||

| + | mcast ens34 225.0.0.1 694 1 0 | ||

| + | <span style="color: red">ucast ens34 10.192.0.223 </span> | ||

| + | # What interfaces to heartbeat over? | ||

| + | #udp eth0 | ||

| + | # | ||

| + | # Facility to use for syslog()/logger (alternative to log/debugfile) | ||

| + | # | ||

| + | logfacility local0 | ||

| + | # | ||

| + | # Tell what machines are in the cluster | ||

| + | # node nodename ... -- must match uname -n | ||

| + | <span style="color: red">node haproxy2001</span> | ||

| + | <span style="color: red">node haproxy2002</span> | ||

| + | |||

| + | - haresources | ||

| + | haproxy2001 10.192.0.224 | ||

| + | |||

| + | We are done with the configuration | ||

| + | ===Checking=== | ||

| + | Login to your 1st haproxy server (haproxy2001) and type in sudo ifconfig | ||

| + | root@haproxy2001:~# sudo ifconfig | ||

| + | ens32 Link encap:Ethernet HWaddr 00:0c:29:97:0e:3b | ||

| + | inet addr:10.192.0.222 Bcast:10.192.0.255 Mask:255.255.255.0 | ||

| + | inet6 addr: fe80::20c:29ff:fe97:e3b/64 Scope:Link | ||

| + | UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1 | ||

| + | RX packets:3406037 errors:0 dropped:628 overruns:0 frame:0 | ||

| + | TX packets:3515729 errors:0 dropped:0 overruns:0 carrier:0 | ||

| + | collisions:0 txqueuelen:1000 | ||

| + | RX bytes:861909566 (861.9 MB) TX bytes:868073629 (868.0 MB) | ||

| + | <span style="color: red">ens32:0 Link encap:Ethernet HWaddr 00:0c:29:97:0e:3b | ||

| + | inet addr:10.192.0.224 Bcast:10.192.0.255 Mask:255.255.255.0 | ||

| + | UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1 </span> | ||

| + | |||

| + | We can see that haproxy2001 is using the virtual IP address 10.192.0.224 | ||

| + | |||

| + | On haproxy2002 we have: | ||

| + | root@haproxy2002:/etc/heartbeat# sudo ifconfig | ||

| + | ens32 Link encap:Ethernet HWaddr 00:0c:29:1e:92:b1 | ||

| + | inet addr:10.192.0.223 Bcast:10.192.0.255 Mask:255.255.255.0 | ||

| + | inet6 addr: fe80::20c:29ff:fe1e:92b1/64 Scope:Link | ||

| + | UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1 | ||

| + | RX packets:1789179 errors:0 dropped:331 overruns:0 frame:0 | ||

| + | TX packets:1454996 errors:0 dropped:0 overruns:0 carrier:0 | ||

| + | collisions:0 txqueuelen:1000 | ||

| + | RX bytes:523204516 (523.2 MB) TX bytes:500107486 (500.1 MB) | ||

| + | If haproxy2001 goes done, haproxy2002 will pick up automatically the virtual IP address. Let us test that by turning off heartbeat on haproxy2001 | ||

| + | |||

| + | * haproxy2001 | ||

| + | root@haproxy2001:~# systemctl stop heartbeat | ||

| + | ens32 Link encap:Ethernet HWaddr 00:0c:29:97:0e:3b | ||

| + | inet addr:10.192.0.222 Bcast:10.192.0.255 Mask:255.255.255.0 | ||

| + | inet6 addr: fe80::20c:29ff:fe97:e3b/64 Scope:Link | ||

| + | UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1 | ||

| + | RX packets:3406037 errors:0 dropped:628 overruns:0 frame:0 | ||

| + | TX packets:3515729 errors:0 dropped:0 overruns:0 carrier:0 | ||

| + | collisions:0 txqueuelen:1000 | ||

| + | RX bytes:861909566 (861.9 MB) TX bytes:868073629 (868.0 MB) | ||

| + | |||

| + | * haproxy2002 | ||

| + | root@haproxy2002:~# sudo ifconfig | ||

| + | ens32 Link encap:Ethernet HWaddr 00:0c:29:97:0e:3b | ||

| + | inet addr:10.192.0.223 Bcast:10.192.0.255 Mask:255.255.255.0 | ||

| + | inet6 addr: fe80::20c:29ff:fe97:e3b/64 Scope:Link | ||

| + | UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1 | ||

| + | RX packets:3406037 errors:0 dropped:628 overruns:0 frame:0 | ||

| + | TX packets:3515729 errors:0 dropped:0 overruns:0 carrier:0 | ||

| + | collisions:0 txqueuelen:1000 | ||

| + | RX bytes:861909566 (861.9 MB) TX bytes:868073629 (868.0 MB) | ||

| + | <span style="color: red">ens32:0 Link encap:Ethernet HWaddr 00:0c:29:97:0e:3b | ||

| + | inet addr:10.192.0.224 Bcast:10.192.0.255 Mask:255.255.255.0 | ||

| + | UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1 </span> | ||

| + | Now we have our load balancing and redundancy setup it is now to set up a test application node. | ||

| + | =Application node for testing= | ||

| + | For the application node, we are going to setup a web server using apache2 and PHP7 and follow the tutorial at https://www.taniarascia.com/create-a-simple-database-app-connecting-to-mysql-with-php/ to create an application that will connect to the database. | ||

| + | |||

| + | I am not going to go over the setup of the application since it is well explained in the link. I will covert the most important part which is how to connect the application to the database. | ||

| + | |||

| + | I have my web server address at 10.192.0.220 with the name web1.dfw.ppnet. In the application tutorial the file you need to modify to connect to the database is the "config.php" file. Since i am using apache2, all my files are under /var/www/html directory which is also my root directory. the config.php file looks like the one below. | ||

| + | |||

| + | <?php | ||

| + | /** | ||

| + | * Configuration for database connection | ||

| + | * | ||

| + | */ | ||

| + | <span style="color: red">$host = "10.192.0.224"; </span>. | ||

| + | <span style="color: red">$username = "root"; </span> | ||

| + | <span style="color: red">$password = "haproxy_user_password"; </span> | ||

| + | <span style="color: red">$dbname = "test"; // will use later </span> | ||

| + | $dsn = "mysql:host=$host;dbname=$dbname"; // will use later | ||

| + | $options = array( | ||

| + | PDO::ATTR_ERRMODE => PDO::ERRMODE_EXCEPTION | ||

| + | ); | ||

| + | |||

| + | $host = the haproxy virtual ip address | ||

| + | |||

| + | $username = the user to use to connect to the database we set this up in [[#Setting haproxy user on SQL nodes]] | ||

| + | |||

| + | $password = the pasword of $username we set this up in [[#Setting haproxy user on SQL nodes]] | ||

| + | |||

| + | $dbname = the name of the database you want to use | ||

| + | |||

| + | That is all you need to connect your application to your database. If everything is setup correctly, you should get the application open when you type in your server IP/index.php. See image below. | ||

| + | |||

| + | [[FILE:Web_app1.png]] | ||

| + | |||

| + | ==Testing== | ||

| + | * First test | ||

| + | Add new user. If it works with no problem, move to the next test. | ||

| + | * Second test | ||

| + | Stop mysql on db2004 or db2009 and try to read or write by creating or reading from the application | ||

| + | * Third test | ||

| + | while one of the mysql node is stopped, stop one of the data node too and try to read and write from the application | ||

| + | * Fourth test | ||

| + | stop heartbeat on haproxy2001 and try to read or write from the application. | ||

| + | |||

| + | All those tests will show you that the application will still be up and running if you have only; | ||

| + | - 1 sql node | ||

| + | - 1 data node | ||

| + | - 1 haproxy server | ||

| + | |||

| + | * Last test | ||

| + | on the mysql node where mysql was stopped, start back mysql | ||

| + | |||

| + | Bring back up the data node that was stopped | ||

| + | |||

| + | start heartbeat on haproxy2001 | ||

| + | |||

| + | login to the sql node that was stopped and you will see all the data will be there. | ||

| + | |||

| + | =Troubleshooting= | ||

| + | |||

| + | * Error on SQL nodes | ||

| + | |||

| + | If you get an error like the one below, you need to reload the management node | ||

| + | |||

| + | [ERROR] NDB: error (1) Configuration error: Error: Could not alloc node id at db2008.dfw.ppnet port 1186: Connection done from wrong host ip 10.192.16.29. | ||

| + | Here db2008.dfw.ppnet is the management node and 10.192.16.29 is the management node IP address. | ||

| + | |||

| + | Login to the management node and issue the command | ||

| + | |||

| + | sudo ndb_mgmd --reload --config-file /var/lib/mysql-cluster/config.ini | ||

| + | |||

| + | After that login back to your SQL node and start back the mysql services | ||

| + | |||

| + | sudo systemctl start mysql | ||

| + | |||

| + | =Maintenance= | ||

| + | to shutdown the whole cluster, login to the management node (db2008) at the shell type in | ||

| + | ppaul@db2008:~$ ndb_mgm -e shutdown | ||

| + | output | ||

| + | Connected to Management Server at: localhost:1186 | ||

| + | 3 NDB Cluster node(s) have shutdown. | ||

| + | Disconnecting to allow management server to shutdown. | ||

=References= | =References= | ||

| Line 571: | Line 851: | ||

https://dev.mysql.com/doc/mysql-cluster-excerpt/5.7/en/mysql-cluster-install-debian.html | https://dev.mysql.com/doc/mysql-cluster-excerpt/5.7/en/mysql-cluster-install-debian.html | ||

| + | |||

| + | https://www.taniarascia.com/create-a-simple-database-app-connecting-to-mysql-with-php/ | ||

=Conclusion= | =Conclusion= | ||

| + | We have discus about a whole working environment that you can put in production in this tutorial by setting up a NDB cluster with 5 nodes, 2 load balancing and 1 application node. Hope this tutorial was helpful. | ||

Latest revision as of 22:46, 12 July 2021

In the tutorial we will install MYSQL Network Database cluster (NDB) using 4 nodes.

Prerequisites

To complete this tutorial, you'll need the following:

- 1 management node

- 2 data nodes

- 1 SQL node

- mysql-cluster-gpl-7.6.7-linux-glibc2.12-x86_64

Naming

db2004.dfw.ppnet = 1st SQL node

db2009.dfw.pppnet = 2nd SQL node

db2005.dfw.ppnet = 1st data node

db2006.dfw.ppnet= 2nd data node

db2008.dfw.ppnet= management node

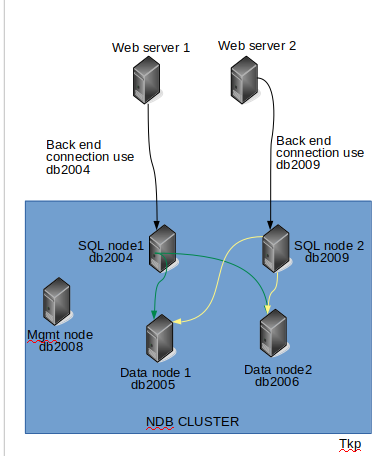

Diagram

Hardware/software requirements

I am running this in a VM environment. All nodes have 1Gb of RAM and 20GB of disk. To run this in a productions environment please refer to the MSQL NDB cluster documentation.

free -h

total used free shared buff/cache available

Mem: 996M 254M 167M 10M 574M 578M

Swap: 1.0G 5.4M 1.0G

df -h Filesystem Size Used Avail Use% Mounted on udev 488M 0 488M 0% /dev tmpfs 100M 11M 90M 11% /run /dev/sda1 19G 9.0G 8.8G 51% / tmpfs 499M 0 499M 0% /dev/shm tmpfs 5.0M 0 5.0M 0% /run/lock tmpfs 499M 0 499M 0% /sys/fs/cgroup tmpfs 100M 0 100M 0% /run/user/2001

All 4 nodes are running Debian stretch

lsb_release -a No LSB modules are available. Distributor ID: Debian Description: Debian GNU/Linux 9.5 (stretch) Release: 9.5 Codename: stretch

Networking

IF you want to use the node FQDN you need to have a DNS server or update the /etc/hosts file; othewise, you can you the node IP address. For this tutorial We are gong to use the node FQDN.

- 1st SQL node

127.0.0.1 localhost 10.192.16.62 db2004.dfw.ppnet db2004

- 2nd SQL node

127.0.0.1 localhost 10.192.16.29 db2009.dfw.ppnet db2009

- 1st data node

127.0.0.1 localhost 10.192.16.63 db2005.dfw.ppnet db2005

- 2nd data node

127.0.0.1 localhost 10.192.16.64 db2006.dfw.ppnet db2006

- management node

127.0.0.1 localhost 10.192.16.61 db2008.dfw.ppnet db2008

Installation

login to each node and copy the scrip for each node to the appropriate node

Management node

sudo vi mgmt_node.sh

#!/bin/bash file="config.ini" #create a folder called files under /tmp/ echo " Creating folder" sleep 3 cd /tmp/ sudo mkdir files cd files #download the ndb_cluster software echo "Download ndb_cluster software" sleep 3 sudo wget https://dev.mysql.com/get/Downloads/MySQL-Cluster-7.6/mysql-cluster-gpl-7.6.7-linux-glibc2.12-x86_64.tar.gz echo "unzip the tar file " sleep 3 sudo tar -xvf mysql-cluster-gpl-7.6.7-linux-glibc2.12-x86_64.tar.gz #copy files" echo "copy ndb and ndb_mgmd to /usr/local/bin" sleep 3 cd mysql-cluster-gpl-7.6.7-linux-glibc2.12-x86_64 cd bin sudo cp ndb_mgm /usr/local/bin/ sudo cp ndb_mgmd /usr/local/bin/ cd /usr/bin/ sudo chmod 755 ndb_mg* #create the configuration file echo " Create /var/lib/mysql-cluster/config.ini" sleep 3 sudo mkdir /var/lib/mysql-cluster/ cd /var/lib/mysql-cluster if [ ! -f "$file" ] ; then # if not create the file cat > $file << EOF [ndb_mgmd] # Management process options: hostname=db2008.dfw.ppnet # Hostname of the manager datadir=/var/lib/mysql-cluster # Directory for the log files [ndbd] hostname=db2005.dfw.ppnet # Hostname of the first data node datadir=/usr/local/mysql/data # Remote directory for the data files [ndbd] hostname=db2006.dfw.ppnet # Hostname of the second data node datadir=/usr/local/mysql/data # Remote directory for the data files [mysqld] # SQL node options: hostname=db2004.dfw.ppnet # Hostname of the 1st SQL node [mysqld] hostname=db2009.dfw.ppnet # Hostname of the 2nd SQL node EOF else echo "$file existe" fi #Start the manegement node echo "starting the manegement node" sleep 3 cd sudo ndb_mgmd -f /var/lib/mysql-cluster/config.ini --configdir=/var/lib/mysql-cluster/ #make the process to start a boot echo"start ndb_mgmd at boot" sleep 3 sudo echo "ndb_mgmd -f /var/lib/mysql-cluster/config.ini --configdir=/var/lib/mysql-cluster/" > /etc/init.d/ndb_mgmd sudo chmod 755 /etc/init.d/ndb_mgmd

Make the script executable

sudo chmod +x mgmt_node.sh

Run the script

sudo ./mgmt_node.sh

Set back and relax

After the script complete type command

ndb_mgm

The output should look the same as below

-- NDB Cluster -- Management Client -- ndb_mgm>

At the prompt, type "show" and you should see

ndb_mgm> show Connected to Management Server at: localhost:1186 Cluster Configuration --------------------- [ndbd(NDB)] 2 node(s) id=2 (not connected, accepting connect from db2005.dfw.ppnet) id=3 (not connected, accepting connect from db2006.dfw.ppnet) [ndb_mgmd(MGM)] 1 node(s) id=1 @10.192.16.61 (mysql-5.7.23 ndb-7.6.7) [mysqld(API)] 2 node(s) id=4 (not connected, accepting connect from db2004.dfw.ppnet) id=5 (not connected, accepting connect from db2009.dfw.ppnet)

We can see that the management node is up and running and the data nodes and SQL nodes are stay down. In the next session we are going to setup the data nodes Note: If you are using UFW make sure that port 1186 is open on the mgmt node.

Data node

Create a file on you data nodes and copy and paste the script below.

sudo vi data_node.sh #!/bin/bash file="my.cnf" #create a folder called files under /tmp/ echo " Creating folder" sleep 3 cd /tmp/ sudo mkdir files cd files #download the ndb_cluster software echo "Download ndb_cluster software" sleep 3 sudo wget https://dev.mysql.com/get/Downloads/MySQL-Cluster-7.6/mysql-cluster-gpl-7.6.7-linux-glibc2.12-x86_64.tar.gz echo "unzip the tar file " sleep 3 sudo tar -xvf mysql-cluster-gpl-7.6.7-linux-glibc2.12-x86_64.tar.gz cd mysql-cluster-gpl-7.6.7-linux-glibc2.12-x86_64/bin #copy files ndbd and ndbmtd to /usr/local/bin sudo cp ndbd /usr/local/bin sudo cp ndbmtd /usr/local/bin cd /usr/local/bin sudo chmod 755 ndb* #create the my.cnf file /etc/my.cnf cd /etc/ if [ ! -f "$file" ] ; then # if not create the file cat > $file << EOF [mysqld] ndbcluster [mysql_cluster] ndb-connectstring=db2008.dfw.ppnet EOF else echo "$file existe" fi #Create the data directory sudo mkdir -p /usr/local/mysql/data #start the service cd sudo ndbd

save the file and close it. Make the file executable

sudo chmod +x data._node.sh

run the file

sudo ./data_node.sh

After the installation you should get the output below

2018-09-19 00:06:16 [ndbd] INFO -- Angel connected to 'db2008.dfw.ppnet:1186' 2018-09-19 00:06:16 [ndbd] INFO -- Angel allocated nodeid: 2

Repeat the above steps for each data node. Once done, login to your management node to check the 2 data nodes

- check the management server

ppaul@db2008:~$ ndb_mgm -- NDB Cluster -- Management Client -- ndb_mgm> show Connected to Management Server at: localhost:1186 Cluster Configuration --------------------- [ndbd(NDB)] 2 node(s) id=2 @10.192.16.63 (mysql-5.7.23 ndb-7.6.7, Nodegroup: 0) id=3 @10.192.16.64 (mysql-5.7.23 ndb-7.6.7, Nodegroup: 0, *) [ndb_mgmd(MGM)] 1 node(s) id=1 @10.192.16.61 (mysql-5.7.23 ndb-7.6.7) [mysqld(API)] 1 node(s) id=4 (not connected, accepting connect from db2004.dfw.ppnet) id=5 (not connected, accepting connect from db2009.dfw.ppnet) ndb_mgm5

We see that the 2 data nodes are up and running. In the next step, we will setup the mysql_node

SQL node

Login to you sql node (my case db2004) as root and create a file called "sql_node.sh" and copy and paste the content below into the file.

sudo vi sql_node.sh

#!/bin/bash ###sql node ##run as root## file="my.cnf" #Create the mysql user and group echo "Add user and group mysql" sleep 3 groupadd mysql useradd -g mysql mysql #create a folder called files under /tmp/ echo "Creating folder" sleep 3 cd /tmp/ mkdir files cd files #Download the ndb_cluster software echo "Download ndb_cluster software" sleep 3 sudo wget https://dev.mysql.com/get/Downloads/MySQL-Cluster-7.6/mysql-cluster-gpl-7.6.7-linux-glibc2.12-x86_64.tar.gz echo "unzip the tar file " sleep 3 sudo tar -xvf mysql-cluster-gpl-7.6.7-linux-glibc2.12-x86_64.tar.gz mv mysql-cluster-gpl-7.6.7-linux-glibc2.12-x86_64/ /usr/local/ #Create symbolic link echo "reate symbolic link" cd /usr/local ln -s mysql-cluster-gpl-7.6.7-linux-glibc2.12-x86_64/ /usr/local/mysql apt-get install libaio1 apt-get install libaio-dev apt-get install libnuma1 #Change to mysql directory, initialize Mysql echo "Initilize MYSQL" sleep 3 cd /usr/local/mysql/bin/ ./mysqld --initialize \ --user=mysql \ --basedir=/usr/local/mysql \ --datadir=/usr/local/mysql/data #Relocate the bin repectory under /usr/local/mysql/bin cd /usr/local/mysql/bin mv * /usr/bin cd ../ rm -fr /usr/local/mysql/bin ln -s /usr/bin /usr/local/mysql/bin #Set permission for root and mysql users echo "Setting permission" sleep 3 cd /usr/local/ chown -R root:root mysql cd /usr/local/mysql chown -R mysql:mysql data/ #set mysqld to start at boot echo "mysqld sartup" sleep 3 cd /usr/local/mysql/ cp support-files/mysql.server /etc/init.d/ chmod 755 /etc/init.d/mysql.server #create the my.cnf file /etc/my.cnf cd /etc/ if [ ! -f "$file" ] ; then # if not create the file cat > $file << EOF [mysqld] ndbcluster default_storage_engine = NDBCLUSTER [mysql_cluster] ndb-connectstring=db2008.dfw.ppnet [mysqld] basedir=/usr/local/mysql EOF else echo "$file existe" fi #Start MYSQL" echo "starting mysql" sleep 3 /etc/init.d/mysql.server start

Save the file and make it executable

chmod +x sql_node.sh

Run the file

./sql_node.sh

After completion, you will see a line that says; "[Note] A temporary password is generated for root@localhost: taE2*BEj;,:N" Copy and paste the temporary password somewhere. we will change this in the next step.

Repeat the above steps for each SQL node. Once done, login to your management node to check the 2 SQL nodes

Verification

login to you management node once again and type "ndb_mgm" then "show"

ppaul@db2008:~$ ndb_mgm -- NDB Cluster -- Management Client -- ndb_mgm> show Connected to Management Server at: localhost:1186 Cluster Configuration --------------------- [ndbd(NDB)] 2 node(s) id=2 @10.192.16.63 (mysql-5.7.23 ndb-7.6.7, Nodegroup: 0) id=3 @10.192.16.64 (mysql-5.7.23 ndb-7.6.7, Nodegroup: 0, *) [ndb_mgmd(MGM)] 1 node(s) id=1 @10.192.16.61 (mysql-5.7.23 ndb-7.6.7) [mysqld(API)] 1 node(s) id=4 @10.192.16.62 (mysql-5.7.23 ndb-7.6.7) id=5 @10.192.16.29 (mysql-5.7.23 ndb-7.6.7) ndb_mgm>

We can see that we now have the sql nodes up

Changing the default root password

Login to your SQL node as root and issue the command " mysql -u root -p" when prompt, enter or copy and paste the temporary password. At the mysql prompt, type "set password = 'your_new_password'"

root@db2004:~# mysql -u root -p Enter password: Welcome to the MySQL monitor. Commands end with ; or \g. Your MySQL connection id is 4 Server version: 5.7.23-ndb-7.6.7-cluster-gpl Copyright (c) 2000, 2018, Oracle and/or its affiliates. All rights reserved. Oracle is a registered trademark of Oracle Corporation and/or its affiliates. Other names may be trademarks of their respective owners. Type 'help;' or '\h' for help. Type '\c' to clear the current input statement. mysql> set password = 'your_new_password'; Query OK, 0 rows affected (0.14 sec) mysql>

Now that we have all the nodes setup and running is time to run some test.

Testing

Test1

- We are going to create on the sql node1 a database name sql_node1

- Do a show databases to see if the database is create

- login to sql node 2 and check if the database sql_node1 exist

1st SQL node (db2004)

root@db2004:~# mysql -u root -p Enter password: Welcome to the MySQL monitor. Commands end with ; or \g. Your MySQL connection id is 6 Server version: 5.7.23-ndb-7.6.7-cluster-gpl MySQL Cluster Community Server (GPL) Copyright (c) 2000, 2018, Oracle and/or its affiliates. All rights reserved. Oracle is a registered trademark of Oracle Corporation and/or its affiliates. Other names may be trademarks of their respective owners. Type 'help;' or '\h' for help. Type '\c' to clear the current input statement. mysql> create database sql_node1; Query OK, 1 row affected (0.05 sec) mysql> show databases; +--------------------+ | Database | +--------------------+ | information_schema | | db_sqnode | | mysql | | mysqlclustertest | | ndbinfo | | performance_schema | | sql_node1 | | sys | +--------------------+ 8 rows in set (0.00 sec) mysql>

2nd SQL node (db2009)

root@db2009:~# mysql -u root -p Enter password: Welcome to the MySQL monitor. Commands end with ; or \g. Your MySQL connection id is 5 Server version: 5.7.23-ndb-7.6.7-cluster-gpl MySQL Cluster Community Server (GPL) Copyright (c) 2000, 2018, Oracle and/or its affiliates. All rights reserved. Oracle is a registered trademark of Oracle Corporation and/or its affiliates. Other names may be trademarks of their respective owners. Type 'help;' or '\h' for help. Type '\c' to clear the current input statement. mysql> show databases; +--------------------+ | Database | +--------------------+ | information_schema | | db_sqnode | | mysql | | mysqlclustertest | | ndbinfo | | performance_schema | | sql_node1 | | sys | +--------------------+ 8 rows in set (0.01 sec) mysql>

Test2

- We are going to create on the sql node2 a database name sql_node2

- Do a show databases to see if the database is create

- login to sql node 1 and check if the database sql_node2 exist.

sql node2 (db2009)

root@db2009:~# mysql -u root -p Enter password: Welcome to the MySQL monitor. Commands end with ; or \g. Your MySQL connection id is 7 Server version: 5.7.23-ndb-7.6.7-cluster-gpl MySQL Cluster Community Server (GPL) Copyright (c) 2000, 2018, Oracle and/or its affiliates. All rights reserved. Oracle is a registered trademark of Oracle Corporation and/or its affiliates. Other names may be trademarks of their respective owners. Type 'help;' or '\h' for help. Type '\c' to clear the current input statement. mysql> create database sql_node2; Query OK, 1 row affected (0.43 sec) mysql> show databases; +--------------------+ | Database | +--------------------+ | information_schema | | db_sqnode | | mysql | | mysqlclustertest | | ndbinfo | | performance_schema | | sql_node1 | | sql_node2 | | sys | +--------------------+ 9 rows in set (0.00 sec) mysql>

sql node1 (dd2004)

root@db2004:~# mysql -u root -p Enter password: Welcome to the MySQL monitor. Commands end with ; or \g. Your MySQL connection id is 8 Server version: 5.7.23-ndb-7.6.7-cluster-gpl MySQL Cluster Community Server (GPL) Copyright (c) 2000, 2018, Oracle and/or its affiliates. All rights reserved. Oracle is a registered trademark of Oracle Corporation and/or its affiliates. Other names may be trademarks of their respective owners. Type 'help;' or '\h' for help. Type '\c' to clear the current input statement. mysql> show databases; +--------------------+ | Database | +--------------------+ | information_schema | | db_sqnode | | mysql | | mysqlclustertest | | ndbinfo | | performance_schema | | sql_node1 | | sql_node2 | | sys | +--------------------+ 9 rows in set (0.00 sec) mysql>

We can see that what matter what SQL node we pick to use, we have the same data on both nodes.

Management

How to add a new SQL or data node

- Step 1

Before you add a new SQL or data node, make sure if you are using an IP that the management node can ping the new node and the new node can ping also the management node. If you are using the FQDN or hostanme, make sure that name can be resolved by both the management node and the new node.

- step 2

Login to your management node and stop the management node with the command "1 stop" 1 being the node ID

ndb_mgm> show

Cluster Configuration

---------------------

[ndbd(NDB)] 2 node(s)

id=2 @10.192.16.63 (mysql-5.7.23 ndb-7.6.7, starting, Nodegroup: 0)

id=3 @10.192.16.64 (mysql-5.7.23 ndb-7.6.7, Nodegroup: 0, *)

[ndb_mgmd(MGM)] 1 node(s)

id=1 @10.192.16.61 (mysql-5.7.23 ndb-7.6.7)

[mysqld(API)] 2 node(s)

id=4 @10.192.16.62 (mysql-5.7.23 ndb-7.6.7)

id=5 @10.192.16.29 (mysql-5.7.23 ndb-7.6.7)

ndb_mgm> Node 2: Started (version 7.6.7)

ndb_mgm> 1 stop

Node 1 has shutdown.

Disconnecting to allow Management Server to shutdown

- Step 3

Navigate to your config.ini file and add the new node to the file

[ndb_mgmd] # Management process options: hostname=db2008.dfw.ppnet # Hostname of the manager datadir=/var/lib/mysql-cluster # Directory for the log files [ndbd] hostname=db2005.dfw.ppnet # Hostname of the first data node datadir=/usr/local/mysql/data # Remote directory for the data files [ndbd] hostname=db2006.dfw.ppnet # Hostname of the second data node datadir=/usr/local/mysql/data # Remote directory for the data files [ndbd] hostname=new_Data node name/IP # Hostname of the third data node datadir=/usr/local/mysql/data # Remote directory for the data files #SQL node options [mysqld] hostname=db2004.dfw.ppnet #SQL node1 [mysqld] hostname=db2009.dfw.ppnet #SQL node2 [mysqld] hostname=new_sql node name/IP #SQL node3

save the file and exit.

- Step 4

Reload the config file

sudo ndb_mgmd -f /var/lib/mysql-cluster/config.ini --reload

- Step5

if you are adding a data node, follow the step on how to add a data node

if you are adding a SQL node, follow the step on how to add a sql node

This complete the step on adding a new node.

Maintenance

This is perform on the management node

- shutting down the whole cluster

ndb_mgm> shutdown

- shut down a single node

node id stop

If mysql is stay running on the SQL nodes, and you have 2 data nodes like in this tutorial, you can not shut down both data nodes. This is to prevent data lost or application error. so if you have for example:

2 data nodes you can shut down only 1 node 3 data nodes you can shut down 2 nodes

Scenario 1

Now that we have our cluster fully setup, we are going to discuss about what happen at the application level.

if we do have for example 2 application nodes (web1 and web2) running a simple application to create a new user, we will have to setup web1 to connect to SQL node 1 and web2 to connect to SQL node 2. In this scenario, if one of the SQL node is not available, the application connecting to that particular SQL node will fail.

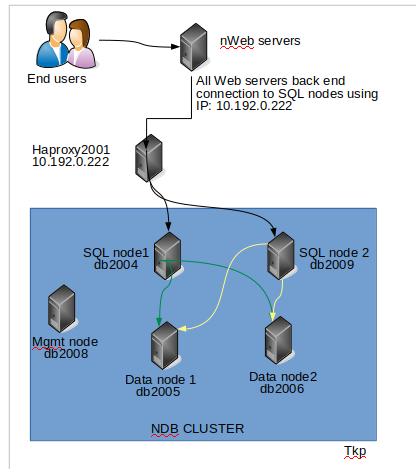

Scenario 2

To address the issue in scenario 1, we will have to add a haproxy node between the application and the SQL nodes. The application will connect to the haproxy node and the haproxy will balance the load to each SQL node. in case one of the SQL node is not available, the haproxy node knows how to connect all the application nodes to the available SQL node. The problem here is that, if the haproxy node is not availlable, the application nodes will fail.

Scenario 3

To address the issue in scenario 2, we will add another haproxy node.The first haproxy will be in active mode and the second one in passive mode. We will setup heartbeat on both haproxy nodes and setup a virtual IP between both node. IF the active node goes down automatically, the passive node take over and become the active node and if the active node comes back up, it takes over the active node role and the passive node goes back to the passive mode role.

Setting up haproxy

Setting haproxy user on SQL nodes

Login to your SQL nodes and run the commands below

create user 'haproxy_check_user'@'haproxy2001.dfw.ppnet'; grant all privileges on *.* to 'root'@'haproxy2001.dfw.ppnet' identified by 'your_password' with grant option; create user 'haproxy_check_user'@'haproxy2002.dfw.ppnet'; grant all privileges on *.* to 'root'@'haproxy2002.dfw.ppnet' identified by 'your_password' with grant option;

Installing haproxy

Login to both your haproxy servers and run the command

sudo apt-get install haproxy

Configuring haproxy

Navigate to

/etc/haproxy

and open the haproxy configuration file "haproxy.cfg"

vi haproxy.cfg

Copy and paste the code below at the end of the file. This needs to be done on both haproxy servers

# HAProxy's stats

listen stats

bind 10.192.0.224:8880

stats enable

stats hide-version

stats uri /

stats realm HAProxy Statistics

stats auth user_name:user_name_password

listen webfarm

bind 10.192.0.224:3306

mode tcp

balance roundrobin

option mysql-check user haproxy_check

server SQL1 db2004.dfw.ppnet:3306 check fall 2 inter 1000

server SQL2 db2009.dfw.ppnet:3306 check fall 2 inter 1000

- stats auth = The username and password we will use to login to the haproxy WEB GUI

- 10.192.0.224 = The virtual IP address we will use for heartbeat in the next section

Installing heartbeat

Login to both your haproxy servers and run the command

sudo apt-get install heartbeat

For the heartbeat there are a couple of files we need to work on. This needs to be done also on both haproxy servers

configuring heartbeat

- On haproxy2001

Navigate to /etc/heartbeat and open authkeys; use the link below to generate a md5 hash and past it into the file https://www.md5hashgenerator.com/ - authkeys

auth 3 3 md5 75cbfa4f4f9f0606171f7bc3c61905c7 -

Save and close the file.

The next file to open is "ha.cf" in the ucast session enter you NIC name and the ip address and at the bottom of the file put the name of both haproxy servers - ha.cf

# # keepalive: how many seconds between heartbeats # keepalive 2 # # deadtime: seconds-to-declare-host-dead # deadtime 10 # # What UDP port to use for udp or ppp-udp communication? # udpport 694 bcast ens34 mcast ens34 225.0.0.1 694 1 0 ucast ens34 10.192.0.222 # What interfaces to heartbeat over? #udp eth0 # # Facility to use for syslog()/logger (alternative to log/debugfile) # logfacility local0 # # Tell what machines are in the cluster # node nodename ... -- must match uname -n node haproxy2001 node haproxy2002

The last file to edit is "haresources. Here you have the option to use the haproxy2001 or the haproxy2002 name it really doesn't matter. But it has to be the same on both haproxy's, and 10.192.0.224 in my case is the Virtual IP, replace this with your IP.

- haresources

haproxy2001 10.192.0.224

- On haproxy2002

- authkeys

auth 3 3 md5 75cbfa4f4f9f0606171f7bc3c61905c7 -

- ha.cf

# # keepalive: how many seconds between heartbeats # keepalive 2 # # deadtime: seconds-to-declare-host-dead # deadtime 10 # # What UDP port to use for udp or ppp-udp communication? # udpport 694 bcast ens34 mcast ens34 225.0.0.1 694 1 0 ucast ens34 10.192.0.223 # What interfaces to heartbeat over? #udp eth0 # # Facility to use for syslog()/logger (alternative to log/debugfile) # logfacility local0 # # Tell what machines are in the cluster # node nodename ... -- must match uname -n node haproxy2001 node haproxy2002

- haresources

haproxy2001 10.192.0.224

We are done with the configuration

Checking

Login to your 1st haproxy server (haproxy2001) and type in sudo ifconfig

root@haproxy2001:~# sudo ifconfig

ens32 Link encap:Ethernet HWaddr 00:0c:29:97:0e:3b

inet addr:10.192.0.222 Bcast:10.192.0.255 Mask:255.255.255.0

inet6 addr: fe80::20c:29ff:fe97:e3b/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:3406037 errors:0 dropped:628 overruns:0 frame:0

TX packets:3515729 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:861909566 (861.9 MB) TX bytes:868073629 (868.0 MB)

ens32:0 Link encap:Ethernet HWaddr 00:0c:29:97:0e:3b

inet addr:10.192.0.224 Bcast:10.192.0.255 Mask:255.255.255.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

We can see that haproxy2001 is using the virtual IP address 10.192.0.224

On haproxy2002 we have:

root@haproxy2002:/etc/heartbeat# sudo ifconfig

ens32 Link encap:Ethernet HWaddr 00:0c:29:1e:92:b1

inet addr:10.192.0.223 Bcast:10.192.0.255 Mask:255.255.255.0

inet6 addr: fe80::20c:29ff:fe1e:92b1/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:1789179 errors:0 dropped:331 overruns:0 frame:0

TX packets:1454996 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:523204516 (523.2 MB) TX bytes:500107486 (500.1 MB)

If haproxy2001 goes done, haproxy2002 will pick up automatically the virtual IP address. Let us test that by turning off heartbeat on haproxy2001

- haproxy2001

root@haproxy2001:~# systemctl stop heartbeat

ens32 Link encap:Ethernet HWaddr 00:0c:29:97:0e:3b

inet addr:10.192.0.222 Bcast:10.192.0.255 Mask:255.255.255.0

inet6 addr: fe80::20c:29ff:fe97:e3b/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:3406037 errors:0 dropped:628 overruns:0 frame:0

TX packets:3515729 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:861909566 (861.9 MB) TX bytes:868073629 (868.0 MB)

- haproxy2002

root@haproxy2002:~# sudo ifconfig

ens32 Link encap:Ethernet HWaddr 00:0c:29:97:0e:3b

inet addr:10.192.0.223 Bcast:10.192.0.255 Mask:255.255.255.0

inet6 addr: fe80::20c:29ff:fe97:e3b/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:3406037 errors:0 dropped:628 overruns:0 frame:0

TX packets:3515729 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:861909566 (861.9 MB) TX bytes:868073629 (868.0 MB)

ens32:0 Link encap:Ethernet HWaddr 00:0c:29:97:0e:3b

inet addr:10.192.0.224 Bcast:10.192.0.255 Mask:255.255.255.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

Now we have our load balancing and redundancy setup it is now to set up a test application node.

Application node for testing

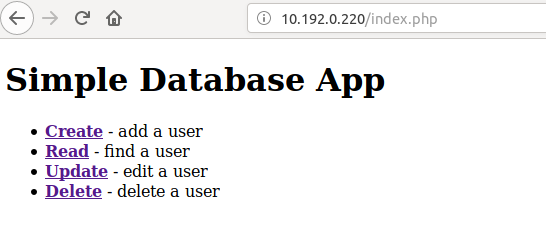

For the application node, we are going to setup a web server using apache2 and PHP7 and follow the tutorial at https://www.taniarascia.com/create-a-simple-database-app-connecting-to-mysql-with-php/ to create an application that will connect to the database.

I am not going to go over the setup of the application since it is well explained in the link. I will covert the most important part which is how to connect the application to the database.

I have my web server address at 10.192.0.220 with the name web1.dfw.ppnet. In the application tutorial the file you need to modify to connect to the database is the "config.php" file. Since i am using apache2, all my files are under /var/www/html directory which is also my root directory. the config.php file looks like the one below.

<?php /** * Configuration for database connection * */ $host = "10.192.0.224"; . $username = "root"; $password = "haproxy_user_password"; $dbname = "test"; // will use later $dsn = "mysql:host=$host;dbname=$dbname"; // will use later $options = array( PDO::ATTR_ERRMODE => PDO::ERRMODE_EXCEPTION );

$host = the haproxy virtual ip address

$username = the user to use to connect to the database we set this up in #Setting haproxy user on SQL nodes

$password = the pasword of $username we set this up in #Setting haproxy user on SQL nodes

$dbname = the name of the database you want to use

That is all you need to connect your application to your database. If everything is setup correctly, you should get the application open when you type in your server IP/index.php. See image below.

Testing

- First test

Add new user. If it works with no problem, move to the next test.

- Second test

Stop mysql on db2004 or db2009 and try to read or write by creating or reading from the application

- Third test

while one of the mysql node is stopped, stop one of the data node too and try to read and write from the application

- Fourth test

stop heartbeat on haproxy2001 and try to read or write from the application.

All those tests will show you that the application will still be up and running if you have only; - 1 sql node - 1 data node - 1 haproxy server

- Last test

on the mysql node where mysql was stopped, start back mysql

Bring back up the data node that was stopped

start heartbeat on haproxy2001

login to the sql node that was stopped and you will see all the data will be there.

Troubleshooting

- Error on SQL nodes

If you get an error like the one below, you need to reload the management node

[ERROR] NDB: error (1) Configuration error: Error: Could not alloc node id at db2008.dfw.ppnet port 1186: Connection done from wrong host ip 10.192.16.29.

Here db2008.dfw.ppnet is the management node and 10.192.16.29 is the management node IP address.

Login to the management node and issue the command

sudo ndb_mgmd --reload --config-file /var/lib/mysql-cluster/config.ini

After that login back to your SQL node and start back the mysql services

sudo systemctl start mysql

Maintenance

to shutdown the whole cluster, login to the management node (db2008) at the shell type in

ppaul@db2008:~$ ndb_mgm -e shutdown

output

Connected to Management Server at: localhost:1186 3 NDB Cluster node(s) have shutdown. Disconnecting to allow management server to shutdown.

References

https://smeretech.com/install-mysql-cluster-debian/

https://clusterengine.me/how-to-install-mysql-ndb-cluster-on-linux/

https://dev.mysql.com/doc/mysql-cluster-excerpt/5.7/en/mysql-cluster-install-debian.html

https://www.taniarascia.com/create-a-simple-database-app-connecting-to-mysql-with-php/

Conclusion

We have discus about a whole working environment that you can put in production in this tutorial by setting up a NDB cluster with 5 nodes, 2 load balancing and 1 application node. Hope this tutorial was helpful.